Gazelle Master Model

Introduction

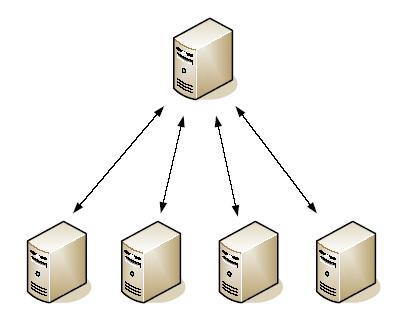

Gazelle Master Model manages the sharing of the model information used by the different Gazelle instances. The Gazelle database consists of more than 190 tables. Gazelle instances run as slaves of the master model and can request updates from the master.

Gazelle Master Model

Editing of Technical Framework Concepts / Test Definitions / Meta Tests / Dependencies

This module allows the user to create, read, update, delete and deprecate concepts in the master data model.

Sharing of Technical Framework

Each Gazelle instance can get updates of the Technical Framework concepts from the master model.

Sharing of Test definitions

As with IHE Technical Framework concepts, test definitions can be shared through Gazelle Master Model.

Sharing of samples

Samples are used by connectathon participants to share images and documents between the creator and the reader without using transactions. Files are stored in Gazelle and can be downloaded by other users. Numerous types of samples are defined; these sample type definitions are stored in Gazelle Master Model.

Sharing of links to technical references (available)

A link (URL) to reference documents can be associated with a Domain, a Profile, a Transaction or an Actor/Profile/Option tuple. These links are shared with the clients through GMM.

Sharing of audit messages

Gazelle Master Model also lists all the audit messages defined by IHE. Under the Audit messages menu, you can access the audit messages that a given actor must produce for a specific transaction (if defined by IHE). A link to the specific audit message validator is also available from this page.

Sharing of standards

IHE defines transactions based on existing standards. In order to make a reference from a given transaction to an underlying standard, Gazelle Master Model maintains a list of standards used in the various IHE integration profiles.

TF – Overview

Project overview

The Technical Framework (TF) overview is a tool that provides a graphical interface for navigating among the TF concepts; it displays the description of each concept and gives access to its information page.

Web user interface

Description

- Breadcrumb: indicates the path followed in the navigation among TF concepts

- Root: the keyword of the selected concept

- Children: the concepts related to the root

- Edge: the link between the root and each of its children

- Description: information about the child currently under the mouse pointer

- Link: gives access to the information page of the concept shown in the description

- Close: closes the description

Navigation

The first graphical representation displays all domains of the Technical Framework. Navigation then proceeds in the following order:

- all integration profiles for a given domain

- all actors for a given integration profile

- all transactions and all integration profile options for a given actor and integration profile

- all initiators and responders for a given transaction

Clicking the keyword of a child generates the corresponding graphic. Clicking the root goes back one step in the navigation and regenerates the previous graphic.
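The drill-down described above behaves like a stack: clicking a child pushes it onto the breadcrumb, and clicking the root pops back to the previous graphic. A minimal Python sketch of that behaviour, assuming a hypothetical TFNavigator class and level labels taken from the list above; it is not the Gazelle implementation and does not distinguish transactions from profile options.

```python
from __future__ import annotations

# Drill-down order of the TF overview, as listed above (labels are illustrative).
LEVELS = [
    "domain",
    "integration profile",
    "actor",
    "transaction or profile option",
    "initiator or responder",
]


class TFNavigator:
    """Hypothetical model: the breadcrumb is a stack of the keywords clicked so far."""

    def __init__(self) -> None:
        # The first graphic lists all domains, so nothing is selected yet.
        self.breadcrumb: list[str] = []

    def current_level(self) -> str:
        """Kind of concept shown as children in the current graphic."""
        return LEVELS[len(self.breadcrumb)]

    def click_child(self, keyword: str) -> None:
        """Clicking a child's keyword makes it the new root, one level down."""
        if len(self.breadcrumb) == len(LEVELS) - 1:
            raise ValueError("already at the deepest level")
        self.breadcrumb.append(keyword)

    def click_root(self) -> None:
        """Clicking the root goes back to the previous graphic."""
        if self.breadcrumb:
            self.breadcrumb.pop()
```

For example, clicking RAD then SWF leaves the breadcrumb at ["RAD", "SWF"] with actors displayed; clicking the root returns to the integration profiles of RAD.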

TF - Integration profile diagram

Project overview

On the information page of an integration profile, the integration profile diagram is a graphical representation that displays the transactions between the actors for this integration profile.

Technical Framework Web user interface

Description

- diagram of actor/transaction pairs in the selected integration profile

- zoom out

- zoom in

- save the diagram to the user's computer

Editing Profile Information

Introduction

Gazelle Master Model (GMM) allows administrators to add new Integration Profile information into Gazelle. This enables vendors to register to test these profiles at a Connectathon. Gazelle must be configured to know about the actors, transactions, and options defined within an Integration Profile. It must also know which Domain the Integration Profile belongs to.

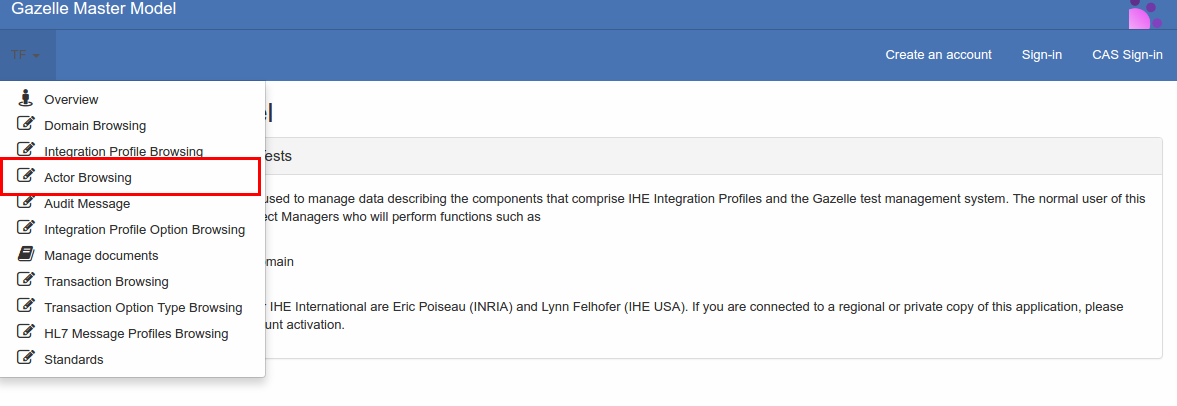

Add new actors

Gazelle is populated with Actors from Integration Profiles across all IHE domains. Before adding a new Actor, search the list of existing Actors to see if it already exists (e.g., an Acquisition Modality or a Content Creator actor is used by many IHE Integration Profiles).

From the main menu, select TF -> Actor Management

- Search for existing Actors. There are several methods you can use:

- You can restrict the search to a specific domain by selecting a value in the Select a Domain list box

- Search by Keyword by typing into the data entry box at the top of the Keyword or Name column. (The search starts as you begin typing; there is no need to press Enter to start the search.)

- You can sort the Keyword or Name columns by clicking on the up and down triangles in the column heading.

- To add a new actor, select the Add an actor button at the top right of the page.

- On the Actor : New screen, enter:

- Keyword - This is a short form of the Actor name; it can be an abbreviation or acronym. Use only upper case letters and underscores, with no spaces. Although Gazelle allows you to edit this keyword, you should not change it once you have completed your data entry: the dependency configuration relies on this value remaining the same.

- Name – This is the full name of the actor from the Technical Framework.

- Description – You can copy the definition for the actor from the Technical Framework. May be left blank.

- Select the Save button to finish.

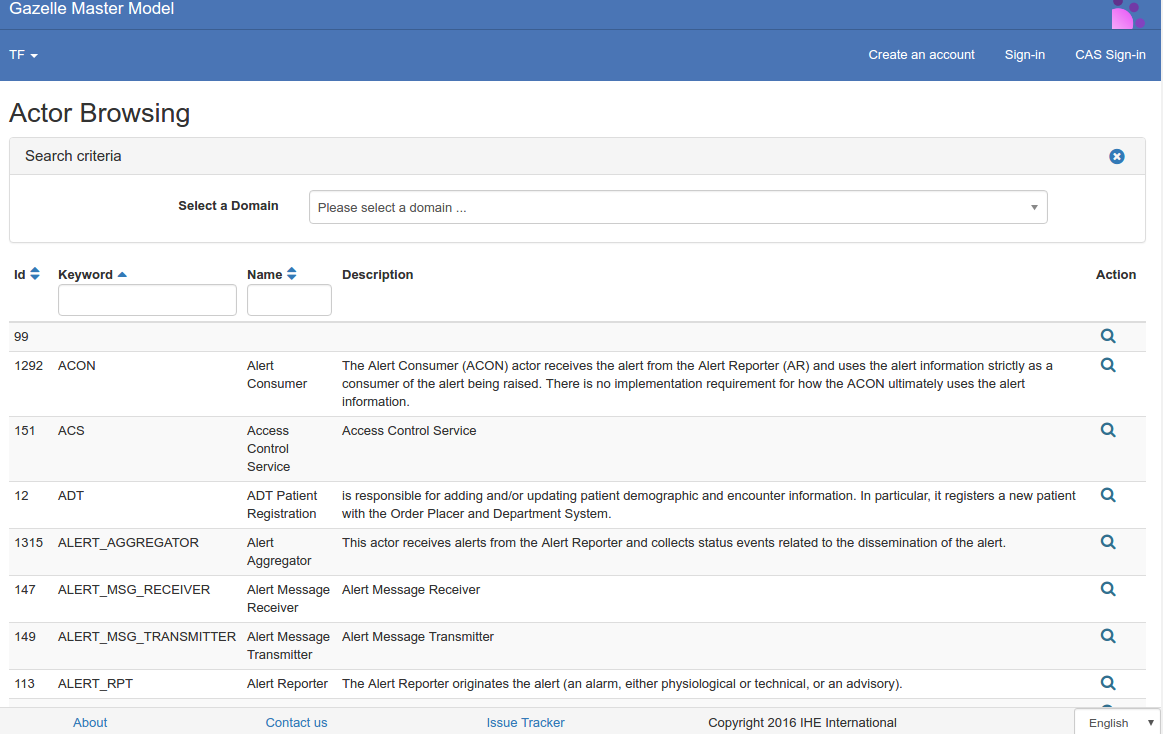
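The keyword rule above (upper case letters and underscores, no spaces) can be checked before saving. A minimal sketch with a hypothetical helper name; the pattern allows exactly A-Z and the underscore as stated, so it would need relaxing if a domain's actor keywords contain digits.

```python
import re

# Upper case letters and underscores only, no spaces (rule stated above).
ACTOR_KEYWORD = re.compile(r"[A-Z_]+")


def is_valid_actor_keyword(keyword: str) -> bool:
    """Return True if the whole keyword follows the actor keyword convention."""
    return ACTOR_KEYWORD.fullmatch(keyword) is not None
```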

Add new transaction

Transactions, like actors, can be viewed as a list accessed from the TF drop-down menu.

From the main menu, select TF -> Transaction Browsing

- Search for existing Transactions.

- Search by entering a Keyword. This is the abbreviation of the transaction, e.g., ITI-2, QRPH-1, RAD-8.

- Sort the list by Keyword or Name and page through the list using the page numbers at the bottom of the screen.

- To add a new transaction, select the Add transaction button at the top right of the page.

- On the following page, enter:

- Keyword: a shortened form of the Transaction, made of the domain acronym followed by a dash, then a number (no spaces). Example: RAD-4

- Name: this is the full name of the transaction from the Technical Framework.

- Description: you can copy the definition for the transaction from the Technical Framework. May be left blank.

- TF Reference: the reference to the particular volume and section from the Technical Framework. May be left blank.

- Status: all new transactions entered into Gazelle are entered as Trial Implementation. The status may also be Final Text.

- Click on the Save button to save your changes

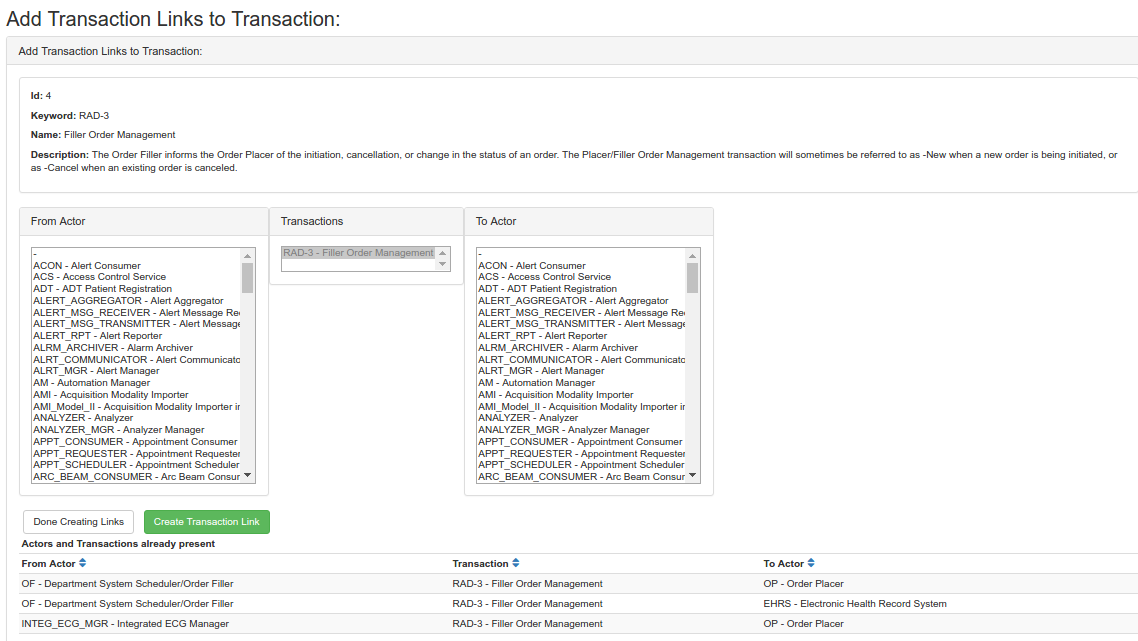
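The keyword format above (domain acronym, a dash, then a number) lends itself to a quick check. A minimal sketch with a hypothetical helper name, assuming the acronym is made of upper case letters only, as in the examples ITI-2, QRPH-1 and RAD-8:

```python
from __future__ import annotations

import re

# Domain acronym, a dash, then a number; no spaces (e.g. RAD-4, QRPH-1).
TRANSACTION_KEYWORD = re.compile(r"([A-Z]+)-([0-9]+)")


def split_transaction_keyword(keyword: str) -> tuple[str, int]:
    """Return (domain acronym, transaction number), or raise ValueError."""
    match = TRANSACTION_KEYWORD.fullmatch(keyword)
    if match is None:
        raise ValueError(f"not a valid transaction keyword: {keyword!r}")
    return match.group(1), int(match.group(2))
```

Splitting the keyword also gives the domain acronym, which can be compared with the Domain the transaction is being added to.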

Link Transactions to Actors

Transactions occur between actors: one actor is the source and the other is the destination. Gazelle is configured to know that a transaction goes From one actor To another actor.

From the main menu, select TF -> Transaction Management

- Search for existing Transactions. Search by entering a Keyword. This is the abbreviation of the transaction, eg ITI-2, QRPH-1, RAD-8.

- Click on the Edit icon in the Action column for the transaction you found.

- On the Edit Transaction page, select the Transactions Links for Transaction tab, then click the Add Transaction Links button.

- Select the appropriate actors from the From Actor and To Actor lists.

- Click the Create Transaction Link button. Repeat as needed, then click the Done creating links button.

- Verify the accuracy of your data entry by going back to review the Transaction links for Transaction tab.

Not currently covered in this document, but needed in order for profile entry to be complete:

- Entering mandatory profile groupings (also known as profile dependencies), i.e., Actor A in Profile P must also implement Actor B in Profile Q (e.g., all actors in the XDS.b profile must also implement the ATNA Secure Node actor)

- Entering default configurations for transactions in the profile

- Entering sample definitions

- Entering Roles for test definitions

Test Definition

Test definitions are available:

- in Gazelle Master Model under Tests Definition –> Tests Definition (read/write)

- in Test Management under Tests Definition –> Tests Definition (read only)

Together with the Technical Framework, test definitions are the basis of Gazelle and one of its most important features for preparing for and participating in a connectathon. The tests define the scenarios that the actors implemented by a system must pass to be validated by the connectathon managers. This section of the documentation is mostly intended for test editors: it explains the different sections of a test and how they must be filled in when creating new tests.

Before writing a test, you need to know three main concepts that determine who will see the test and when.

- Test type indicates whether the test must be performed during the connectathon or during the pre-connectathon period. A third type was added to gather the tests dedicated to HITSP profiles.

- Test status indicates how far the test has progressed. Only “ready” tests are displayed in the participants’ system test lists during the connectathon or pre-connectathon period. “To be completed” tests are currently under development and not yet ready to be exposed to participants. “Deprecated” tests are no longer used; for example, the storage/substitute tests have been replaced by more relevant ones. Finally, the “convert later” status was created when the test definitions were imported from the Kudu system; it means that the test is not needed for now, so working on it is not a priority.

- Test peer type indicates whether the test is performed with “no peer” (no transaction to exchange with a vendor test partner), as “peer to peer” (tests covering a subset of a profile, typically with 2, or sometimes 3, partners), or as a “group test” (tests covering a workflow within an integration profile, run by a full set of actors within the profile; group tests are typically supervised directly by a connectathon monitor).

Each test definition is made of four parts, described below. Each part can be edited individually.

Test Summary

This part gives general information about the test:

- Keyword, name and short description are used to identify the test. By convention, the keyword starts with the profile acronym.

- The test type is connectathon or pre-connectathon, but can be any other type defined in the database.

- The test status indicates the readiness of the test. Only tests with status ready are visible to the testers.

- The peer type indicates whether the test is of type “No peer”, “Peer to Peer” or “Group Test”.

- The permanent link to the test is printed in this part (computed by Gazelle)

- The version of the test gives an indication of the most recent testing event for which the test was created/modified.

- The Is CTT attribute indicates whether or not the test integrates a CTT result.

- The Is orchestrable attribute indicates whether the test will be run against a simulator (true) or against another system under test (false). When run against a simulator, the test requires a BPEL service and the Gazelle web services to be orchestrated. These services enable the simulator to communicate with Gazelle in standalone mode, without any human intervention.

- The Is validated attribute indicates whether or not the test is validated; it is mostly used in the context of accredited testing.

Test Description

This section describes the test scenario precisely and tells the vendor how to perform the test, which tools are required, and so on. It also gives the monitor keys about what to check, which messages to validate, and so on. This part of the test can be translated into different languages. By convention, the test description has three sections:

- Special Instruction: information for the vendor about “special” considerations for this test, for example “ABC test must be run before this one” or “XYZ tool is used in this test”.

- Description: a short overview of the scope of the test.

- Evaluation: These are the specific instructions to the connectathon monitor describing what evidence must be shown by the vendor in order to “pass” this test.

Test Roles

This is the most important part of the test; it is also the most complicated and confusing part of the work.

Assigning one or more Roles to a test determines which Actor/Integration Profile/Profile Option combinations (AIPO) are involved in the test. Roles must be well chosen for two reasons: (1) if a Role is assigned to a test, the test will appear in the list of tests to perform for any test system that supports the AIPO in the Role, and (2) only the transactions supported by the chosen Roles will be available when you define individual Test Steps on the next tab.

Prior to starting a test definition, you should ensure that the Test Roles you need for the test exist; if not, they can be created under Tests Definition –> Role in test management.

A test role (or role in test) is defined as a list of Actor/Integration Profile/Profile Option tuples, and for each of these AIPOs we must specify whether or not the tuple is tested. The primary reason to include a Test Participant (i.e., an AIPO) in a Role with “Tested?” unchecked is that you want the transactions supported by that Test Participant to be used by the other test participants in that Role, without the test showing up as required for the “not tested” participant. This primarily occurs when one actor is “grouped” with another actor.

The whole test role can be set as “played by a tool”, for example the OrderManager (formerly RISMall), the NIST registry, a simulator, and so on.

A convention has been put in place for the naming of test roles:

**<ACTOR_KEYWORD>_<INTEGRATION_PROFILE_KEYWORD>[_<PROFILE_OPTION_KEYWORD> | _ANY_OPTIONS][_WITH_SN][_WITH_ACTOR_KEYWORD][_HELPER]**

If several actors from a profile, or several profiles, are used to define the test role, only the main Actor/Integration Profile pair must be used to name the role.

- _ANY_OPTIONS means that any system implementing any of the options defined for the profile must perform the tests involving this role.

- _WITH_SN means that the transactions in which the role takes part must be run using TLS; consequently, the involved actors must implement the Secure Node actor from the ATNA profile. Note that, in that case, the Secure Node actor is set “not tested”, so that failing this test does not fail the Secure Node actor.

- _WITH_ACTOR_KEYWORD means that the system must support a second actor, which is not tested, in order to perform some initialization steps. For example, PEC_PAM_WITH_PDC gathers the Patient Encounter Consumer actor from the Patient Administration Management profile and the Patient Demographic Consumer from the same profile; this is required because the database of the PEC must be populated with some data received by the PDC. Keep in mind that such associations must be meaningful, that is, the gathered actors must be linked by an IHE dependency.

- Finally, _HELPER means that the role is not tested but is required to ensure the coherence of the test.

Here are some examples to help you understand the naming convention:

- DOC_CONSUMER_XDS.b_ANY_OPTIONS gathers all the Document Consumer actors of the XDS.b profile, whatever options they support.

- IM_SWF_HELPER gathers all the Image Manager actors of the Scheduled Workflow profile; those actors are not tested.
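Because actor keywords may themselves contain underscores (DOC_CONSUMER), a role name cannot reliably be split back into its parts without knowing the keywords, but composing one from its parts is mechanical. A minimal sketch of a hypothetical helper that assembles the suffixes in the order given by the convention:

```python
from __future__ import annotations


def build_role_name(
    actor: str,
    profile: str,
    option: str | None = None,
    any_options: bool = False,
    with_sn: bool = False,
    with_actor: str | None = None,
    helper: bool = False,
) -> str:
    """Compose a test role name following the naming convention above."""
    if option is not None and any_options:
        # The convention allows one option keyword or _ANY_OPTIONS, not both.
        raise ValueError("use either a profile option or ANY_OPTIONS, not both")
    parts = [actor, profile]
    if option is not None:
        parts.append(option)
    elif any_options:
        parts.append("ANY_OPTIONS")
    if with_sn:
        parts.append("WITH_SN")
    if with_actor is not None:
        parts.append(f"WITH_{with_actor}")
    if helper:
        parts.append("HELPER")
    return "_".join(parts)
```

With this helper, build_role_name("IM", "SWF", helper=True) yields IM_SWF_HELPER and build_role_name("PEC", "PAM", with_actor="PDC") yields PEC_PAM_WITH_PDC.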

If the test participant is a tool or a simulator, we use the system name as the test role name: <SIMULATOR or UTILITY_NAME>, for instance ORDER_MANAGER, CENTRAL_ARCHIVE, NIST_REGISTRY, and so on.

Once you have chosen the roles involved in your test, you will be asked to give some more information for each of them, such as:

- # to realize: the number of times the system must successfully perform this test for the tested actor to be validated. Typically, this is “3” for peer to peer tests, and “1” for No Peer and Group tests.

- Card Min: (cardinality) how many (at least) systems with this role must be involved in the test

- Card Max: (cardinality) how many (at most) systems with this role can be involved in the test

- Optionality: Required means that the test is required for that role; Optional means that the test is optional for that role.

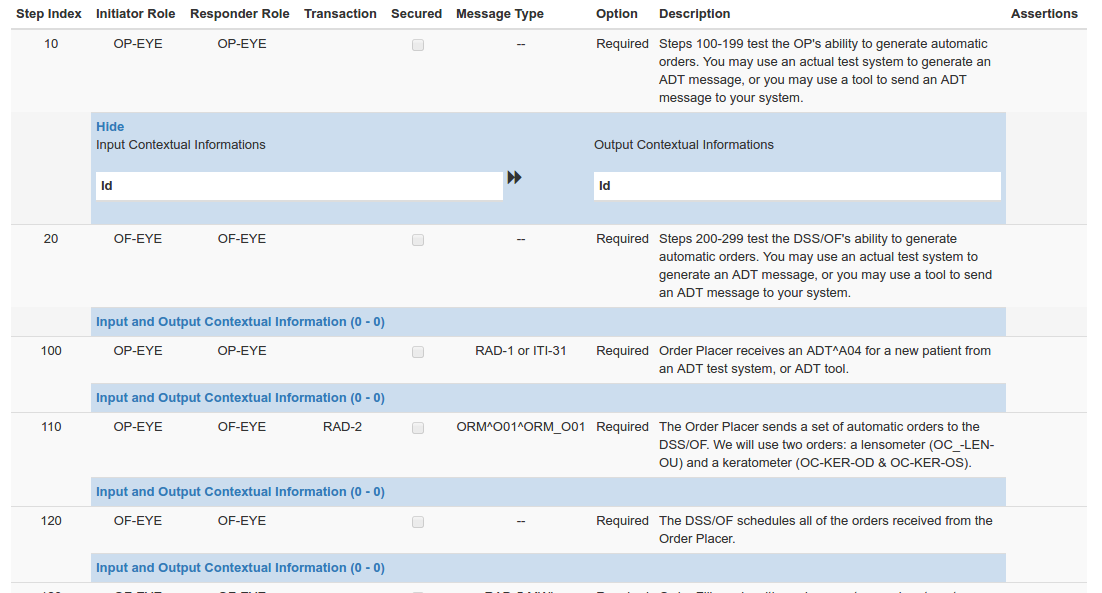
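The four settings entered for each role can be pictured as a small record with a few consistency rules. A minimal sketch; the RoleInTest class, its field names, the rule that Card Min may be 0 and the TYPICAL_TO_REALIZE table (built from the typical values quoted above) are assumptions, not the Gazelle data model.

```python
from __future__ import annotations

from dataclasses import dataclass

# Typical "# to realize" values quoted above, keyed by test peer type.
TYPICAL_TO_REALIZE = {"No peer": 1, "Peer to Peer": 3, "Group Test": 1}


@dataclass
class RoleInTest:
    """Hypothetical record of the settings entered for one role of a test."""

    role_name: str
    to_realize: int
    card_min: int
    card_max: int
    optionality: str = "Required"  # or "Optional"

    def __post_init__(self) -> None:
        if self.to_realize < 1:
            raise ValueError("# to realize must be at least 1")
        if not 0 <= self.card_min <= self.card_max:
            raise ValueError("expected 0 <= Card Min <= Card Max")
        if self.optionality not in ("Required", "Optional"):
            raise ValueError("optionality must be Required or Optional")

    def accepts(self, system_count: int) -> bool:
        """True if this many systems with the role may take part in one test run."""
        return self.card_min <= system_count <= self.card_max
```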

Test Steps

To help vendors perform the test, it is split into small units called test steps. In a newly defined test, when you first arrive on this page, you will find a sequence diagram containing only the roles you have previously defined. As you add test steps, the sequence diagram is automatically updated according to the steps you have defined. Red arrows stand for secured transactions (TLS set to true).

Test steps are ordered by step index; in most cases, vendors must respect the given order, especially if the test is run against a simulator.

Each step is described as follows:

- Step index: index of the step

- Initiator role: test role in charge of initiating the transaction (only roles with actors which are initiators for at least one transaction can be used as initiator role)

- Responder role: test role acting as the receiver for this step (only roles with actors which are responders for at least one transaction can be used as responder role)

- Transaction: the transaction to be performed by the two actors

- Secured: indicates whether or not the transaction must be performed over TLS

- Message type: the message sent by the initiator

- Option: indicates whether the test step is required or not

- Description: detailed instructions on how to perform the step

When editing a step, you can choose whether or not to check the Auto Response box. When it is checked, the selected role performs the step alone (initialization, logging, …), so no transaction or message type has to be specified.
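The constraints on a step can be checked against the transaction links entered earlier. A minimal sketch under stated assumptions: the StepDefinition class, the check_step function and the actor keywords INIT_GW and RESP_GW are hypothetical, and the check is stricter than the rule above, since it requires a From/To link for the chosen transaction itself rather than for any transaction.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical transaction links (From actor, To actor); keywords are illustrative.
TRANSACTION_LINKS: dict[str, set[tuple[str, str]]] = {
    "ITI-38": {("INIT_GW", "RESP_GW")},
    "ITI-39": {("INIT_GW", "RESP_GW")},
}


@dataclass
class StepDefinition:
    index: int
    initiator_actors: set[str]  # actors of the initiator role
    responder_actors: set[str] = field(default_factory=set)  # actors of the responder role
    transaction: str | None = None
    auto_response: bool = False


def check_step(step: StepDefinition) -> str | None:
    """Return a description of the problem found in a step, or None."""
    if step.auto_response:
        # The role performs the step alone, so no transaction is expected.
        if step.transaction is not None:
            return f"step {step.index}: an auto-response step must not name a transaction"
        return None
    links = TRANSACTION_LINKS.get(step.transaction or "")
    if links is None:
        return f"step {step.index}: unknown or missing transaction"
    # Require a link from an initiator-role actor to a responder-role actor.
    if not any(src in step.initiator_actors and dst in step.responder_actors
               for src, dst in links):
        return f"step {step.index}: {step.transaction} does not link these roles"
    return None
```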

To save time on small changes, the step index, secured checkbox, option selection and description fields can be edited directly from the main test steps page. The change is recorded in the database each time the modified field loses focus.

If you have chosen to write an orchestrated test, meaning that the system under test will communicate with a simulator, you may have to enter some more information called “Contextual Information”. In some cases, this information is needed by the simulator to build a message that matches the system configuration or data. For instance, it can be used to specify a patient ID known by the system under test.

Two kinds of contextual information are defined:

- Input Contextual Information: the information provided to the simulator

- Output Contextual Information: the information sent back by the simulator, which can be used as input contextual information for the next steps

For each piece of contextual information, you are expected to provide the label of the field and its path (an XPath or an HL7 path, depending on whether you need to feed a specific XML element or an HL7v2 message segment). A default value can also be set.

If you have defined output contextual information for previous steps, you can import it as input contextual information for the next steps, as shown in the capture below. The simulator then receives the output of a previous step as new information and can build the next messages.

For more details about what a simulator expects, read the developer manual of the simulator you want to involve in your test. A short example based on the XCA Initiating Gateway Simulator is given below.

The XCA Initiating Gateway supports two transactions: ITI-38, to query the Responding Gateway for the documents of a specific patient, and ITI-39, to retrieve those documents. In a first step, we may ask the Responding Gateway for the documents of patient 1234^^^&1.2.3.4.5.6&ISO; in the second step, we ask the Responding Gateway to send the first document retrieved.

| Step | Label | Path | Value |
|---|---|---|---|
| Step 1: Input Contextual Information | XDSDocumentEntryPatientId | $XDSDocumentEntry.patientId | 1234^^^&1.2.3.4.5.6&ISO |
| Step 1: Output Contextual Information | XDSDocumentEntryUniqueId | $XDSDocumentEntry.uniqueId | 7.9.0.1.2.3.4 |
| Step 2: Input Contextual Information | XDSDocumentEntryUniqueId | $XDSDocumentEntry.uniqueId | 7.9.0.1.2.3.4 |

In this way, no action on the simulator side is required from the vendor: he/she only has to set up the system under test and give the first input contextual information to the simulator through the Test Management user interface.
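The chaining of contextual information in the XCA example can be modelled in a few lines: each step's inputs are its own values plus the outputs it imports from earlier steps. A minimal sketch; the class and function names are hypothetical, and the output values stand for what the simulator returned, taken from the table above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ContextualInformation:
    label: str
    path: str                 # XPath or HL7 path
    value: str | None = None  # default value, or the value returned by the simulator


@dataclass
class OrchestratedStep:
    inputs: list[ContextualInformation] = field(default_factory=list)
    outputs: list[ContextualInformation] = field(default_factory=list)
    imported: list[str] = field(default_factory=list)  # labels of earlier outputs


def resolve_inputs(steps: list[OrchestratedStep]) -> list[dict[str, str | None]]:
    """For each step, the input values (keyed by path) passed to the simulator."""
    produced: dict[str, ContextualInformation] = {}
    resolved = []
    for step in steps:
        values = {info.path: info.value for info in step.inputs}
        for label in step.imported:
            earlier = produced[label]  # KeyError if no earlier step produced it
            values[earlier.path] = earlier.value
        resolved.append(values)
        # Outputs become importable by the following steps only.
        for info in step.outputs:
            produced[info.label] = info
    return resolved
```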

Meta tests

In some Peer to Peer tests, the transactions supported by one Role are identical across multiple different tests, yet that Role’s partners differ across those tests. This is best illustrated by an example: in Cardiology and Radiology workflow profiles, a scheduling system (Order Filler Role) provides a worklist to various imaging systems (Modality Roles). A vendor’s Order Filler may play the Order Filler Role in the Radiology SWF profile and in the Cardiology ECHO, CATH and STRESS profiles. The Order Filler may then be assigned a Peer to Peer “worklist” test with modalities in each of these profiles. This could result in 12 worklist tests to pass for the Order Filler (3 worklist tests x 4 profiles). Meta Tests allow test definers to eliminate this kind of redundant testing.

Meta tests are special tests made of test definitions that are equivalent for a given test role. We try not to duplicate tests, but two different tests can be identical from the point of view of one test role involved in both. In that case, we merge the two tests under one Meta Test for this specific role.

When a vendor sees a Meta Test in his/her system’s test list, the equivalent tests are listed within the meta test. He/she is allowed to perform 3 instances of any of the tests within the meta test instead of three instances of each individual test. This means that if the meta test is composed of 4 tests, the involved actor is expected to have any combination of 3 instances verified.

Meta tests are defined in Gazelle under Test Definition –> Meta test list. A meta test is given a keyword and a short description; then the equivalent tests are linked to the meta test.

As an example, let’s take the meta test with keyword Meta_Doc_Repository_Load. This meta test gathers four tests defined, among others, for the Document Repository actor of the XDS-I.b profile. Each of these tests asks this actor to perform the RAD-68 and ITI-42 transactions against an actor supporting several options. From the point of view of the Document Repository, those four tests are equivalent since the same transactions are tested four times. Consequently, running only three of the twelve instances it would otherwise have had to do is enough to be successfully graded.
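The grading rule of the Meta_Doc_Repository_Load example fits in two small functions. A minimal sketch, assuming verified test instances are available as a list of test keywords; the function names and the keywords used to exercise them are hypothetical.

```python
from __future__ import annotations


def meta_test_satisfied(verified: list[str], meta_test: set[str], required: int = 3) -> bool:
    """True once `required` verified instances belong to any tests of the meta test."""
    return sum(1 for keyword in verified if keyword in meta_test) >= required


def instances_without_meta_test(meta_test: set[str], per_test: int = 3) -> int:
    """Instances needed if each equivalent test had to be passed separately."""
    return per_test * len(meta_test)
```

For a meta test gathering four tests, instances_without_meta_test returns 12, while meta_test_satisfied is already true after any three verified instances, whichever of the four tests they come from.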

Audit messages

Some systems are required to produce audit logs when a given action is performed. To help implementers know which audit messages their systems must produce, Gazelle Master Model maintains the list of audit messages. An audit message in GMM consists of:

- a trigger event

- an actor (the one which is asked to produce the message)

- a transaction (the one to be audited)

- an event code type (if the trigger event is not enough to distinguish the audit message from another)

- a free text comment

- a reference to the technical framework (link to the section in IHE TF which defines the content of the message)

- a link to the specific validator (OID of the validator in GSS)
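The fields listed above map naturally onto a record, and the Audit messages page essentially answers "which messages must this actor produce for this transaction?". A minimal sketch; the AuditMessage class, the lookup function and the placeholder values used to exercise it are hypothetical, not the GMM schema.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AuditMessage:
    """Hypothetical record mirroring the fields listed above."""

    trigger_event: str
    actor: str
    transaction: str
    event_code_type: str | None = None  # only when the trigger event is not enough
    comment: str = ""
    tf_reference: str = ""
    validator_oid: str = ""  # OID of the validator in GSS


def audit_messages_for(messages: list[AuditMessage], actor: str, transaction: str) -> list[AuditMessage]:
    """Audit messages a given actor must produce for a given transaction."""
    return [m for m in messages if m.actor == actor and m.transaction == transaction]
```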

Standards

The underlying standards are referenced at transaction level. All the standards used by IHE are gathered under the TF –> Standards menu. A standard consists of:

- a keyword (unique within the list)

- a name

- a version

- a network communication type

- a URL to the standard specification

Configuration of master model slaves

This page provides the instructions on how to add a slave application to the master model.

Pre-requisite

Slony 2.0.6: the version of Slony on the slave and on the master shall be identical. The version currently in use is 2.0.6. Run the following command to find out which version you are running:

```
## log onto master
admin@master:~$ slon -v
slon version 2.0.6

## log onto slave
admin@slave:~$ slon -v
slon version 2.0.6
```
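If you compare the two outputs in a script rather than by eye, the check reduces to parsing the line printed by slon -v. A minimal sketch, assuming the output format shown above (slon version X.Y.Z); the function names are hypothetical.

```python
import re

SLON_VERSION = re.compile(r"slon version (\d+(?:\.\d+)*)")


def parse_slon_version(output: str) -> str:
    """Extract the version number from the output of `slon -v`."""
    match = SLON_VERSION.search(output)
    if match is None:
        raise ValueError(f"unexpected slon -v output: {output!r}")
    return match.group(1)


def same_slony_version(master_output: str, slave_output: str) -> bool:
    """Slony must be identical on the master and on the slave."""
    return parse_slon_version(master_output) == parse_slon_version(slave_output)
```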

PostgreSQL: the versions of PostgreSQL on the slave and on the master are not required to be identical but shall be from the same series. The administrator of the master will tell you which version to use on your slave. The master system needs to access the database on the slave; this is achieved by configuring the file pg_hba.conf on the slave:

```
# TYPE  DATABASE          USER     CIDR-ADDRESS          METHOD
host    gazelle-on-slave  gazelle  gazelle-master-ip/32  md5
```

where gazelle-on-slave is the name of the Gazelle database on the slave and gazelle-master-ip is the IP address of the master; the latter will be communicated by the administrator of the master. When the slave is successfully configured, you should be able to run the command psql -h slave -U username gazelle-on-slave from the master and access the remote database.

Once this level of configuration is reached, you can start configuring Slony on the master and on the slave.

Initialisation of slony on the master system

On the master, a slony directory is available at the root of Gazelle’s home directory. The Slony initialisation script is stored in the file slonik_init.sk; this file shall be executable. When run, this script creates a new schema on each of the nodes (slaves and master). If you need to rerun the script, make sure that you first delete this schema from each of the nodes: DROP SCHEMA "_TF" CASCADE;

Pattern for the file slonik_init.sk:

```
#!/usr/bin/slonik
define CLUSTER TF;
define PRIMARY 1;
define SLAVE1 10;
define SLAVE2 20;
define SLAVE3 30;
cluster name = @CLUSTER;

# Here we declare how to access each of the nodes. Master is PRIMARY and others are the slaves.
node @PRIMARY admin conninfo = 'dbname=master-model host=%master-host-name% user=gazelle password=XXXXXX';
node @SLAVE1 admin conninfo = 'dbname=slave1-db host=%slave1-host-name% user=gazelle password=XXXXXX';
node @SLAVE2 admin conninfo = 'dbname=slave2-db host=%slave2-host-name% user=gazelle password=XXXXXX';
node @SLAVE3 admin conninfo = 'dbname=slave3-db host=%slave3-host-name% user=gazelle password=XXXXXX';

# Initialisation of the cluster
init cluster (id=@PRIMARY, comment='Gazelle Master Model');

# Declaration of the slaves
store node (id=@SLAVE1, event node=@PRIMARY, comment='Slave #1');
store node (id=@SLAVE2, event node=@PRIMARY, comment='Slave #2');
store node (id=@SLAVE3, event node=@PRIMARY, comment='Slave #3');

# Define the path from Slaves to Master
store path (server=@PRIMARY, client=@SLAVE1, conninfo='dbname=master-model host=%master-host-name% user=gazelle');
store path (server=@PRIMARY, client=@SLAVE2, conninfo='dbname=master-model host=%master-host-name% user=gazelle');
store path (server=@PRIMARY, client=@SLAVE3, conninfo='dbname=master-model host=%master-host-name% user=gazelle');

# Define the path from Master to Slaves
store path (server=@SLAVE1, client=@PRIMARY, conninfo='dbname=slave1-db host=%slave1-host-name% user=gazelle');
store path (server=@SLAVE2, client=@PRIMARY, conninfo='dbname=slave2-db host=%slave2-host-name% user=gazelle');
store path (server=@SLAVE3, client=@PRIMARY, conninfo='dbname=slave3-db host=%slave3-host-name% user=gazelle');
```
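Because the define, node, store node and store path lines repeat for every slave, they can be rendered from a list of nodes instead of being edited by hand. A minimal Python sketch under stated assumptions: the Node class, the host names and the "Slave #n" numbering are hypothetical, and passwords are deliberately left out of the connection strings (add them as in the pattern above).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    macro: str    # slonik macro name, e.g. PRIMARY or SLAVE1
    node_id: int  # Slony node id, e.g. 1 or 10
    dbname: str
    host: str

    def conninfo(self) -> str:
        return f"dbname={self.dbname} host={self.host} user=gazelle"


def slonik_init(master: Node, slaves: list[Node]) -> str:
    """Render a slonik_init.sk following the pattern above (passwords omitted)."""
    nodes = [master, *slaves]
    lines = ["#!/usr/bin/slonik", "define CLUSTER TF;"]
    lines += [f"define {n.macro} {n.node_id};" for n in nodes]
    lines.append("cluster name = @CLUSTER;")
    lines += [f"node @{n.macro} admin conninfo = '{n.conninfo()}';" for n in nodes]
    lines.append(f"init cluster (id=@{master.macro}, comment='Gazelle Master Model');")
    for i, s in enumerate(slaves, start=1):
        lines.append(f"store node (id=@{s.macro}, event node=@{master.macro}, comment='Slave #{i}');")
    for s in slaves:
        # How the slave (client) reaches the master (server).
        lines.append(f"store path (server=@{master.macro}, client=@{s.macro}, conninfo='{master.conninfo()}');")
    for s in slaves:
        # How the master (client) reaches the slave (server).
        lines.append(f"store path (server=@{s.macro}, client=@{master.macro}, conninfo='{s.conninfo()}');")
    return "\n".join(lines) + "\n"
```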

The next file to consider is script_server.sk. This file must be kept up to date as the schema of Gazelle Master Model changes.

```
#!/usr/bin/slonik
define CLUSTER TF;
define PRIMARY 1;
define SLAVE1 10;
define SLAVE2 20;
define SLAVE3 30;
cluster name = @CLUSTER;

# Here we declare how to access each of the nodes. Master is PRIMARY and others are the slaves.
node @PRIMARY admin conninfo = 'dbname=master-model host=%master-host-name% user=gazelle';
node @SLAVE1 admin conninfo = 'dbname=slave1-db host=%slave1-host-name% user=gazelle';
node @SLAVE2 admin conninfo = 'dbname=slave2-db host=%slave2-host-name% user=gazelle';
node @SLAVE3 admin conninfo = 'dbname=slave3-db host=%slave3-host-name% user=gazelle';

# We need 2 sets: one for the Technical Framework (TF) part and one for the Test Definition (Test Management = TM) part
create set (id=1, origin=@PRIMARY, comment='TF');
create set (id=2, origin=@PRIMARY, comment='TM');

# Assign the tables and sequences to the sets (this file is not complete, use the current version available on the slave)
set add table (id=176, set id=1, origin = @PRIMARY, fully qualified name = 'public.revinfo', comment = 'table');
set add table (id=175, set id=1, origin = @PRIMARY, fully qualified name = 'public.tf_actor_aud', comment = 'table');
set add sequence (id=2, set id=1, origin = @PRIMARY, fully qualified name = 'public.tf_actor_id_seq', comment = 'seq');

# Then, for each slave, start the synchronization
# Example for SLAVE1, to be repeated for each slave
subscribe set (id = 1, provider = @PRIMARY, receiver = @SLAVE1);
sync(id=@PRIMARY);
wait for event(origin=@PRIMARY, confirmed=@SLAVE1, wait on=@PRIMARY);
subscribe set (id = 2, provider = @PRIMARY, receiver = @SLAVE1);
sync(id=@PRIMARY);
wait for event(origin=@PRIMARY, confirmed=@SLAVE1, wait on=@PRIMARY);
# End of section to repeat
```
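The section marked "to be repeated for each slave" can be generated rather than copied by hand. A minimal Python sketch that prints those stanzas for every slave and both sets, assuming the slave macros are already defined at the top of script_server.sk; the function name is hypothetical.

```python
from __future__ import annotations


def subscribe_stanzas(
    slave_macros: list[str],
    set_ids: tuple[int, ...] = (1, 2),  # 1 = TF set, 2 = TM set
    origin: str = "PRIMARY",
) -> str:
    """Repeat the subscribe / sync / wait section for every slave and set."""
    lines = []
    for slave in slave_macros:
        for set_id in set_ids:
            lines.append(f"subscribe set (id = {set_id}, provider = @{origin}, receiver = @{slave});")
            lines.append(f"sync(id=@{origin});")
            # Wait until the slave has confirmed the sync event before continuing.
            lines.append(f"wait for event(origin=@{origin}, confirmed=@{slave}, wait on=@{origin});")
    return "\n".join(lines) + "\n"
```

Calling subscribe_stanzas(["SLAVE1", "SLAVE2", "SLAVE3"]) produces the text to paste between the two comments.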

Initialization of slony on the slaves

The command that starts the slon process is not trivial, so a script that executes it has been written and is available on each of the slaves:

```
nohup slon TF "dbname=gazelle-on-slave user=gazelle" > slonysynch.log
```

Instructions

- Add and edit the install_slony.sh script on the SLAVE VM. Check the versions of Slony and PostgreSQL: they must be the same on both machines (SLAVE and MASTER). The script is available here.

- Then add the SSH key of the MASTER machine to the SLAVE machine.

- On the MASTER machine, configure your SLAVE machine in slony/script/.

- First, add your slave definition to the file definition.inc. The master is PRIMARY and the other nodes are the slaves.

```
define EXAMPLE 31;
```

- Then create three files: disconnect_EXAMPLE.sk, replicate_EXAMPLE.sk and init_EXAMPLE.sk.

- You can copy an older configuration and simply update the database name, user, password and domain name, then define the data tables that need Slony replication.

- On the master machine, edit /etc/postgresql/9.6/main/pg_hba.conf and add at the end:

```
host master-model gazelle slave_ip_machine trust
```

Then reload your PostgreSQL cluster:

```
/usr/lib/postgresql/9.6/bin/pg_ctl reload -D /var/lib/postgresql/9.6/main/
```

- On the slave machine, edit /etc/postgresql/9.6/main/pg_hba.conf and add at the end:

```
host gazelle gazelle master_ip_machine trust
```

Then reload your PostgreSQL cluster:

```
/usr/lib/postgresql/9.6/bin/pg_ctl reload -D /var/lib/postgresql/9.6/main/
```

- On the slave machine, execute the configure script and check that no service or configuration is missing. The script must not return any error.

- To check that you can access the master machine from the slave, run:

```
psql -U gazelle -h ovh4.ihe-europe.net master-model
```

- Before running Slony, add your configuration to the script “re-init-slony.sh”. On the master machine, add your files to the following parts of the script:

- replicate: replicate_EXAMPLE.sk

- init: init_EXAMPLE.sk

- disconnect: disconnect_EXAMPLE.sk

When everything is in place, run re-init-slony.sh. The script takes approximately one hour and must not return any error.

- Note that if Slony replication encounters an issue with one SLAVE VM, replication stops working for all the other slaves as well.

Launching the synchronization or restarting the synchronization

If you are launching the synchronization for the first time (seen from the master), you can start from step 4. If you encounter an error at any point in the process, you need to restart from step 1.

1. Kill the slon process on all slaves: killall slon

2. Kill the slon process on the master: killall slon

3. Drop the “_TF” schema in all slave databases.

4. On the master, run the slonik_init.sk script.

5. On each of the slaves, start the slon process.

6. On the master, start the slon process.

7. On the master, run the script_server.sk script.

8. Check the log files on each of the slaves and on the master to make sure that the synchronization is actually taking place.